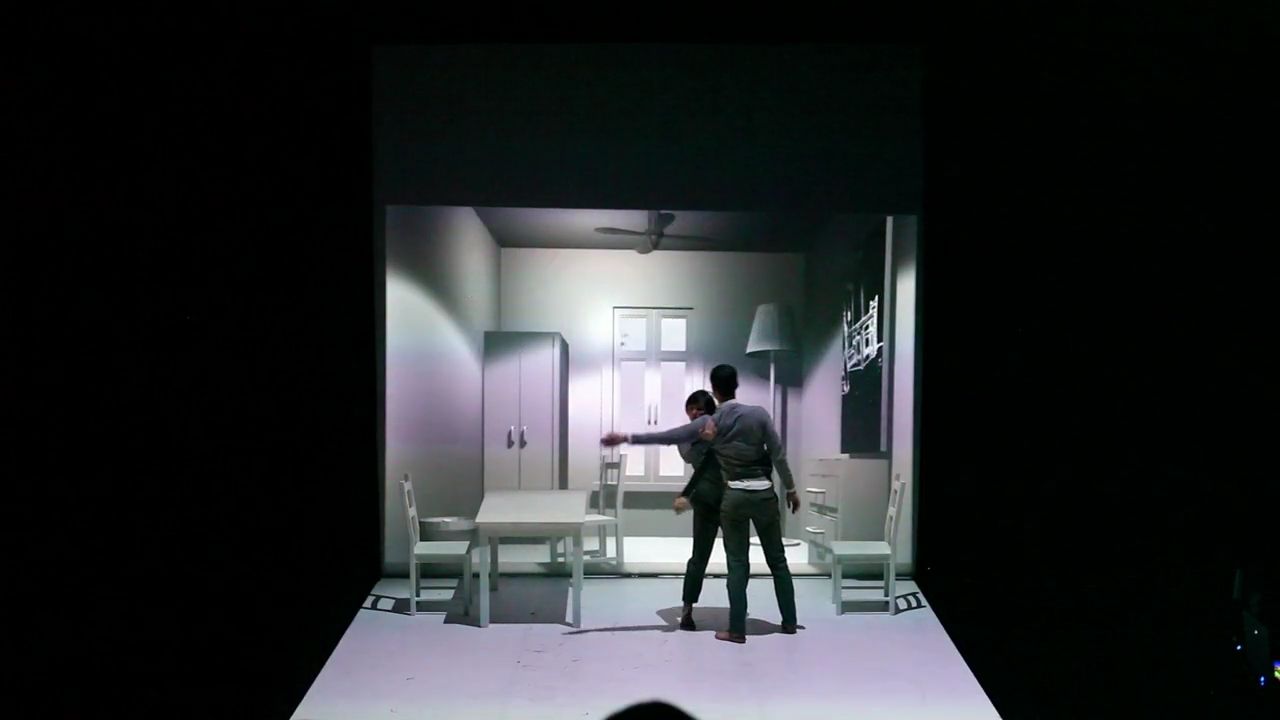

Contemporary dance performance by Anna Abalikhina and Ivan Estegneyev.

Co-production of Atelier de Paris Carolyn Carlson (Paris) and Stantsiya Art Center (Kostroma, Russia).

Media scenography by Curiosity Media Lab (Yan Kalnberzin, Eugene Afonin).

Performed in Paris (Atelier de Paris Carolyn Carlson), Moscow (Meyerhold Center, Gogol-Center) and Kostroma (Stantsiya Art Center), 2013-2016.

We first became acquainted with Yan in 2013 when he taught an intensive week-long TouchDesigner workshop in Moscow organized by Plums Fest. Yan came to TouchDesigner with a Houdini background. He says he appreciates the "logic of a 3D context a lot" and in TouchDesigner loves the "realtime performance combined with high procedural possibilities".

Yan describes his personal work as the creation of video for "plastic performances", which he defines as "performances connected with human movement. You could say dance performances, but they are sometimes far from dance!"

The two recent works featured here, Living Room Performance and POLINATRON, illustrate this point quite clearly. The performances are very different from each other, even somewhat polar, yet each in a masterful and mesmerizing manner pushes beyond what we've seen to date when technologies and processes like gesture recognition, motion tracking, mapping, video and projection are used on a live stage for dance and theatrical performance.

Yan's work is playful and experimental, both in the sense of creating 'experimental art' and in letting what is learned and discovered while making the work inform the process of making it. It also shows a profound and mature aptitude for fluidly creating startlingly new experiences that deliver fantastic surprises.

We asked Yan to tell us a bit about Living Room Performance and POLINATRON, and he very kindly obliged. Enjoy.

Yan Kalnberzin: The performance was created by two dancers - Anna Abalikhina and Ivan Evstegneev. My partner artist-programmer Evgeniy Afonin Netz and I designed and produced the artwork and TouchDesigner patching.

It was an experimental laboratory that took place at the Atelier de Paris directed by Carolyn Carlson, who invited us to experiment for two weeks at her atelier and come up with a multimedia dance performance. So we did! The dancers were dancing to realtime mood music provided by the composer David Monceau, and we tried to model some 3D virtual set elements in realtime as well. A lot of ideas just came by chance, evolving during rehearsals and tests.

The idea that dancers can manipulate 3D environments by rotating real objects like a table was a persistent one, so the next day we used an iPad accelerometer to stream data about the table position to TouchDesigner through OSC.
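To illustrate what streaming accelerometer data over OSC involves, here is a minimal sketch in Python. It is not the patch used in the show: the address `/table/accel`, the function names and the axis convention for the tilt angles are all assumptions made for the example. Only the packet layout (null-terminated strings padded to four bytes, big-endian 32-bit floats) follows the OSC 1.0 format.

```python
import math
import struct


def osc_string(text):
    """Encode an OSC string: ASCII bytes, a null terminator, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def pack_accel(address, ax, ay, az):
    """Build an OSC message carrying three float32 accelerometer values."""
    return osc_string(address) + osc_string(",fff") + struct.pack(">fff", ax, ay, az)


def unpack_accel(packet):
    """Parse a message built by pack_accel and return (address, (ax, ay, az))."""
    end = packet.index(b"\x00")
    address = packet[:end].decode("ascii")
    offset = (end + 4) & ~3  # skip the terminator and padding after the address
    if packet[offset:offset + 4] != b",fff":
        raise ValueError("expected three float arguments")
    values = struct.unpack(">fff", packet[offset + 8:offset + 20])
    return address, values


def tilt_degrees(ax, ay, az):
    """Estimate pitch and roll from a gravity vector (assumed axis convention)."""
    roll = math.degrees(math.atan2(ay, az))
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    return pitch, roll


# Hypothetical address; the real one depends on the iPad app that sends the data.
packet = pack_accel("/table/accel", 0.0, 0.5, -1.0)
address, accel = unpack_accel(packet)
pitch, roll = tilt_degrees(*accel)
```

In a setup like the one described, the receiving side would feed angles such as `pitch` and `roll` into the rotation of the virtual set, so that turning the real table turns the 3D environment.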

Only then did we start to play with the Kinect, deciding to try some simple stuff, like connecting a point light to a root skeleton joint and making it light virtual 3D space. So when a dancer came closer to the rear screen the light went with them to create an illusion of space in the depths being lit to reveal architectural details 'hidden in darkness'.
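The light-follows-dancer idea can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the actual network: the function names, the scale and offset values and the smoothing factor are hypothetical, and the joint position is assumed to arrive as an (x, y, z) tuple in metres from the sensor.

```python
def joint_to_light(joint, scale=(1.0, 1.0, -1.0), offset=(0.0, 0.0, 0.0)):
    """Map a tracked joint position into the coordinates of the virtual scene.

    The default scale flips the depth axis so that walking away from the
    sensor moves the light deeper into the scene (an assumed convention).
    """
    return tuple(j * s + o for j, s, o in zip(joint, scale, offset))


def smooth(previous, target, factor=0.2):
    """Move the light a fraction of the way to the target to damp tracking jitter."""
    return tuple(p + (t - p) * factor for p, t in zip(previous, target))


# One update step: the root joint is 2 m from the sensor, slightly to the right.
light = (0.0, 0.0, 0.0)
root_joint = (0.5, 1.0, 2.0)  # hypothetical sample from the skeleton tracker
light = smooth(light, joint_to_light(root_joint), factor=0.5)
```

Calling `smooth` once per frame makes the light drift toward the dancer rather than jump, which helps hide noise in the skeleton data.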

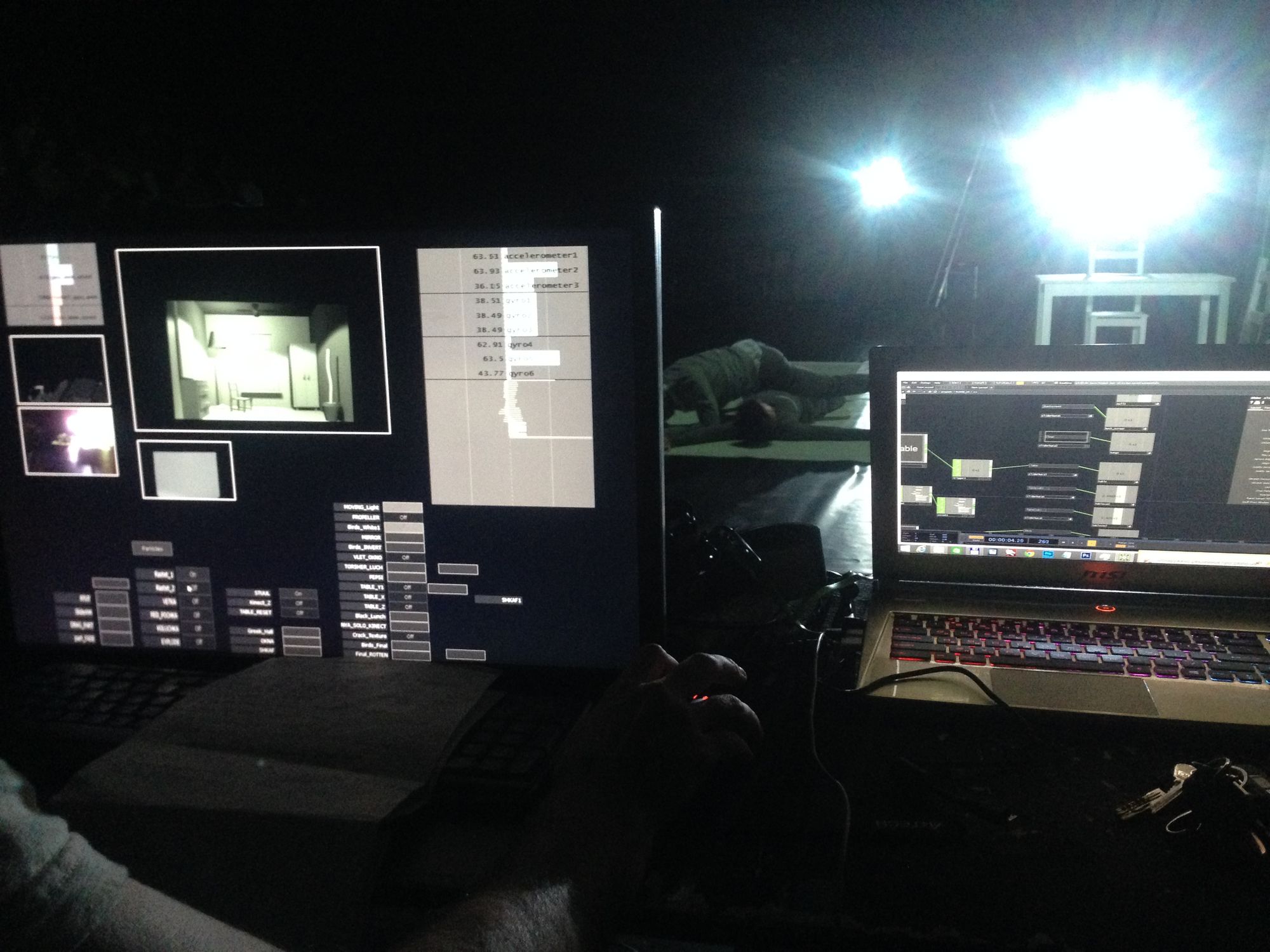

We used two projectors - one for the rear screen and the other projecting from above onto the floor. At some point the patch's frame rate started to drop, so we connected my Alienware and Netz's old MacBook Pro, which was running Windows, over a local network.

Through Touch In/Out TOPs and CHOPs we exchanged data so that Netz's Mac dealt only with the Kinect calculations and with output to the overhead floor projector. I cropped the floor image out of the whole render on my machine and sent it to him via a Touch Out TOP. We didn't have time to make a decent-looking patch, so it looks quite messy and horrifying!! However, it reflects the process of steady realtime patching and works quite OK. People like the show!